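Introduction

Rook is one of the tools for managing storage on Kubernetes. It is an open-source project with CNCF Incubating status and one of the tools for implementing Cloud Native Storage. I started out not even knowing what Cloud Native Storage was, so I looked into what it is, how Rook implements it, what Rook can do, and what Ceph, the storage system mainly used underneath Rook, is. This post summarizes what I found.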

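What is Cloud Native Storage?

From the CNCF

The CNCF publishes the following page, which explains what Cloud Native Storage is.

CNCF - Introduction to Cloud Native Storage

When you migrate an application to a cloud-native environment, several parts of it need to be optimized for that environment. Storage is one of them.

When a container uses storage on the host it runs on, for example through a host mount, data consistency across hosts cannot be maintained, and the data becomes inaccessible when that host stops or restarts. Using a StatefulSet ties containers more tightly to hosts and reduces flexibility. Such usage can hardly be called Cloud Native Storage.

(Excerpted from the slides "Introduction to Cloud Native Storage")

Kubernetes has a feature called Persistent Volume. A Persistent Volume provides persistent storage for Pods on Kubernetes by integrating with external storage and similar backends. It lets data survive across containers, Pods, and hosts, and it lets you choose the storage best suited to each application.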
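
As a rough illustration of how an application asks for such storage, the sketch below builds a PersistentVolumeClaim manifest as a Python dict and prints it as JSON. The claim name, size, and storage class name are hypothetical placeholders, not values from this article's environment.

```python
import json


def build_pvc(name: str, size: str, storage_class: str) -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            # The storage class decides which backend provisions the volume.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical names, chosen only for this example.
pvc = build_pvc("demo-data", "1Gi", "example-block")
print(json.dumps(pvc, indent=2))
```

Because kubectl accepts JSON manifests as well as YAML, the printed output could be saved to a file and applied in the usual way. The point is that the Pod only refers to the claim, not to a specific host or disk.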

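(Excerpted from the slides "Introduction to Cloud Native Storage")

Whether something counts as Cloud Native Storage appears to be judged on points such as the following.

- Interoperable across container orchestrators and runtimes

- Common abstractions for key attributes such as storage size

- Common data services such as snapshots

- RBAC

- Storage performance and capacity can be changed flexibly

- Storage lifecycle and operations can be automated

- Hardware independent

etc.

(Excerpted from the slides "Introduction to Cloud Native Storage")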

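From the StorageOS article

Separately from the CNCF material, the following StorageOS article presents eight principles that Cloud Native Storage should satisfy.

StorageOS - StorageOS Vision for Cloud Native Storage for Today’s Modern IT

- Application Centric

- Application Platform Agnostic

- Declarative and Composable

  - Storage resources should be configured as declarative resources, just like the other resources that applications and services require, so that storage resources and services are deployed as part of application startup by the orchestrator.

- API Driven and Self-managed

  - Storage resources and services should be easy to consume and manage through APIs. Storage should also integrate with application runtimes and orchestrator platforms.

- Agile

  - The platform should respond dynamically to changes in the environment and should be able to:

    - Move applications between locations

    - Dynamically resize volumes as applications grow

    - Take point-in-time copies of data for data retention and rapid recovery

    - Integrate into dynamic, rapidly changing application environments

- Natively Secure

  - Storage services should integrate inline security features such as encryption and RBAC, rather than depend on separate products to protect application data.

- Performant

  - The storage platform should deliver predictable performance in complex distributed environments and should scale efficiently with minimal compute resources.

- Consistently Available

  - The storage platform should manage data distribution with a predictable, trustworthy model in order to guarantee high availability, durability, and consistency of application data. Recovery from data loss should be independent of the application and should not affect normal application operations.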

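What is Rook?

As a tool for implementing Cloud Native Storage, Rook mainly covers the automation of storage operations.

Rook is a storage orchestrator for Kubernetes and works as a Kubernetes Operator. This allows it to manage, scale, and heal distributed storage systems automatically.

Rook itself only manages storage providers, acting as an Operator. The storage providers that can currently be used are listed below. As of October 2019, only Ceph and EdgeFS are available as stable releases.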

| Storage provider | Status |
|---|---|
| Ceph | stable |
| EdgeFS | stable |
| CockroachDB | alpha |
| Cassandra | alpha |
| Minio | alpha |
| NFS | alpha |
| YugaByteDB | alpha |
| Rook Framework | alpha |

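What is Ceph?

Ceph is distributed storage software that treats multiple nodes as a single storage pool. How the pool is accessed, that is, which storage type is used, can be chosen flexibly from the following.

- File storage: organizes and presents data as a hierarchy of files in folders. Used for shared file storage and similar purposes.

- Block storage: organizes data into arbitrarily arranged volumes of equal size. Used for transactional data and similar purposes.

- Object storage: manages data and links it to associated metadata.

Ceph's architecture is roughly as follows.

- Interfaces

  - RADOSGW: for object storage.

  - RBD: for block storage.

  - CephFS: for file storage.

- CRUSH

  - Controlled Replication Under Scalable Hashing. The algorithm used to decide where objects are stored.

- Ceph storage cluster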
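
To give a feel for hash-based placement, the sketch below uses rendezvous (highest-random-weight) hashing to pick storage daemons for an object. This is not the CRUSH algorithm: real CRUSH also takes a cluster map, device weights, and failure domains into account. The sketch only shares the basic idea that a location is computed from the object name rather than looked up in a central table. The OSD names and the object name are hypothetical.

```python
import hashlib
from typing import List


def place(object_name: str, osds: List[str], replicas: int = 3) -> List[str]:
    """Pick `replicas` distinct OSDs by ranking a per-(object, OSD) hash."""

    def score(osd: str) -> int:
        digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # The highest scores win; every client computes the same ranking.
    return sorted(osds, key=score, reverse=True)[:replicas]


# Hypothetical cluster of six OSDs.
osds = [f"osd.{i}" for i in range(6)]
first = place("image-0001", osds)
print(first)
```

Any client that knows the list of OSDs gets the same answer for the same object, and removing an OSD that does not hold the object leaves its placement unchanged.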

References:

Fujitsu - 分散ストレージ ソフトウェア Ceph(セフ)とは (What is Ceph, distributed storage software?)

Cybozu Inside Out - 分散ストレージソフトウェアCephとは (What is Ceph, distributed storage software?)

What is an Operator?

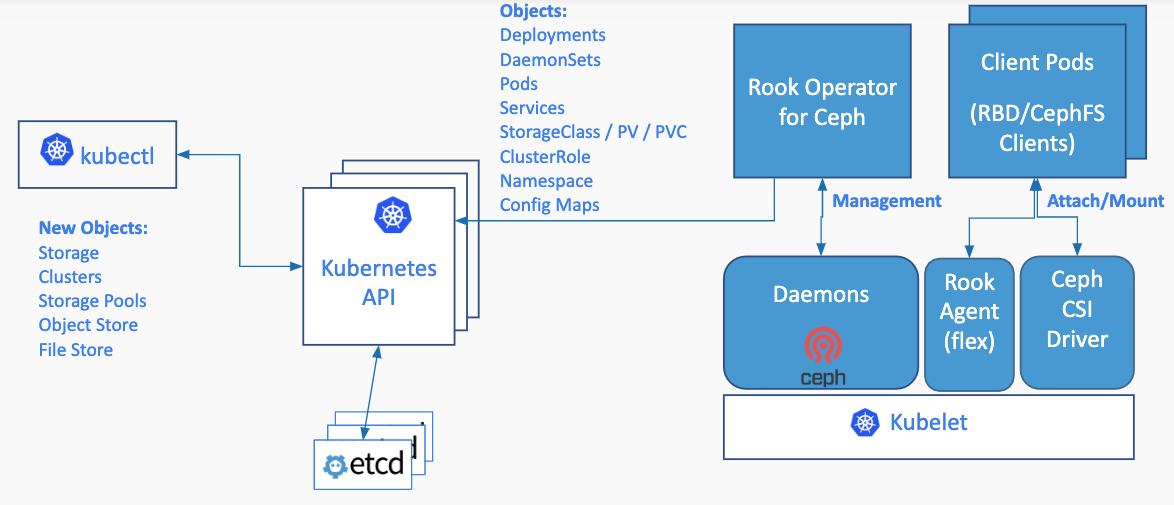

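Rook is provided as a Kubernetes Operator. An Operator is one way of managing applications on Kubernetes: it encodes operational knowledge and can automate tasks such as application updates, backups, and scaling.

An Operator is an application-specific Kubernetes Controller that extends the Kubernetes API and manages stateful applications. For stateless services such as web apps and API services, the features Kubernetes provides out of the box are enough to automate operations such as recovery from failure. Stateful services such as databases and monitoring systems, on the other hand, must be operated correctly on a per-application basis, for reasons such as data protection. An Operator extends the Kubernetes API to embed application-specific domain knowledge, and by developing one, users can automate those operations.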
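
The control-loop idea behind a Controller can be sketched in a few lines. The example below is a toy, in-memory reconcile function, not Rook's implementation and not the Kubernetes client API: `ToyCluster` and the daemon names are hypothetical stand-ins for a real system.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyCluster:
    """In-memory stand-in for the real system an Operator would manage."""
    running: Dict[str, int] = field(default_factory=dict)  # daemon -> count


def reconcile(desired: Dict[str, int], cluster: ToyCluster) -> List[str]:
    """Drive `cluster` toward `desired` and return the actions taken."""
    actions = []
    for daemon, want in desired.items():
        have = cluster.running.get(daemon, 0)
        if have < want:
            actions.append(f"start {want - have} {daemon}")
        elif have > want:
            actions.append(f"stop {have - want} {daemon}")
        cluster.running[daemon] = want
    # Anything running that is no longer desired gets stopped.
    for daemon in list(cluster.running):
        if daemon not in desired:
            actions.append(f"stop {cluster.running.pop(daemon)} {daemon}")
    return actions


cluster = ToyCluster(running={"mon": 1})
first_pass = reconcile({"mon": 3, "osd": 2}, cluster)
second_pass = reconcile({"mon": 3, "osd": 2}, cluster)
print(first_pass)   # actions needed to reach the desired state
print(second_pass)  # nothing left to do once the states match
```

A real Operator runs this kind of comparison continuously against the Kubernetes API, and the domain knowledge lives in how each difference is resolved, for example in which order daemons may be restarted safely.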

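An Operator is built by defining your own versions of two Kubernetes concepts, Resources and Controllers. Because implementing an Operator from scratch takes considerable effort, several frameworks and tools are available. Rook supports OLM (Operator Lifecycle Manager), which controls which Operator is deployed to which Namespace and which users are allowed to use an Operator.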

References:

CoreOS Blog - Introducing Operators: Putting Operational Knowledge into Software

GitHub - Operator Lifecycle Manager

Rook-Ceph

Architecture

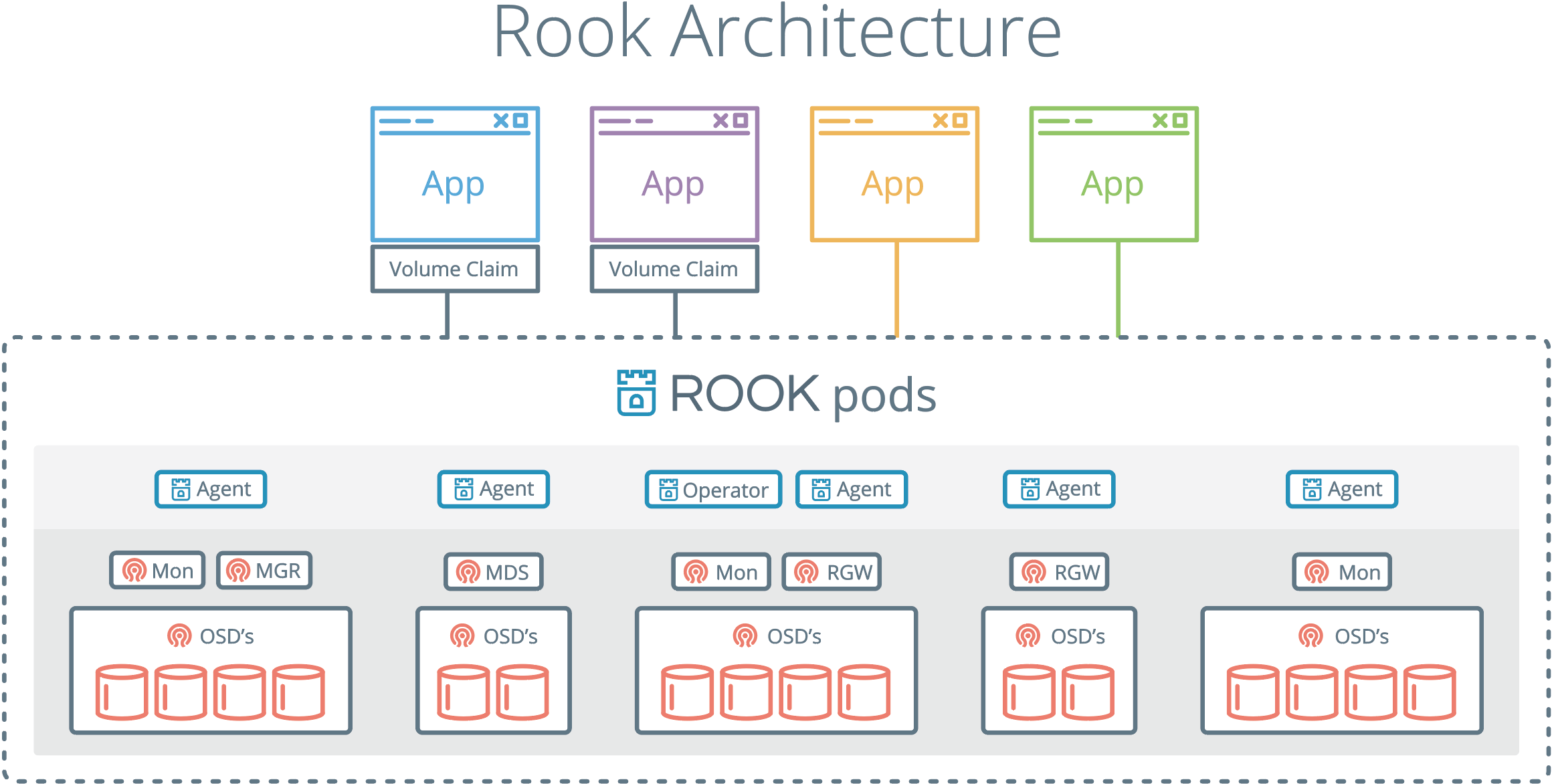

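The architecture is as follows.

Applications consume storage through a Persistent Volume Claim. The Rook Operator manages the storage, and Ceph provides the SDS (software-defined storage) functionality.

By running Ceph on Kubernetes, Kubernetes applications can use block devices and filesystems managed by Rook, or object storage through the S3/Swift API. The Rook Operator automates the configuration of the storage components and monitors the cluster to keep the storage available.

The Rook Operator is a container that has everything needed to bootstrap and monitor the storage cluster. Once started, the Operator starts and monitors the Ceph Monitor pods and the Ceph OSD daemons that provide the Ceph storage, and it also manages the other Ceph daemons. The Operator monitors the storage daemons to confirm that the cluster is healthy. Ceph Monitor pods are started or failed over as needed, and other adjustments are made as the cluster grows or shrinks. The Operator also watches for desired-state changes requested through the API service and applies them.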
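
The desired state the Operator watches is expressed as a custom resource. The sketch below builds a CephCluster manifest as a Python dict, using only field names and limits that appear in the CephCluster CRD in common.yaml (for example `mon.count` between 0 and 9, and `dataDirHostPath` matching `^/(\S+)`). The resource name and the Ceph image tag are assumptions made for illustration; check the official quickstart for the values that match your Rook version.

```python
import json
import re


def build_ceph_cluster(mon_count: int, data_dir: str, image: str) -> dict:
    """Build a CephCluster manifest, checking the limits the CRD declares."""
    if not 0 <= mon_count <= 9:
        raise ValueError("mon.count must be between 0 and 9")
    if not re.match(r"^/(\S+)", data_dir):
        raise ValueError("dataDirHostPath must be an absolute path")
    return {
        "apiVersion": "ceph.rook.io/v1",
        "kind": "CephCluster",
        # The name is a hypothetical choice; the namespace is the one
        # created by common.yaml.
        "metadata": {"name": "rook-ceph", "namespace": "rook-ceph"},
        "spec": {
            "cephVersion": {"image": image},
            "dataDirHostPath": data_dir,
            "mon": {"count": mon_count, "allowMultiplePerNode": False},
            "dashboard": {"enabled": True},
            "storage": {"useAllNodes": True, "useAllDevices": True},
        },
    }


# The image tag below is an assumed placeholder, not taken from this article.
manifest = build_ceph_cluster(3, "/var/lib/rook", "ceph/ceph:v14.2.4")
print(json.dumps(manifest, indent=2))
```

Changing `mon_count` and re-applying the manifest is an example of a desired-state change: the Operator notices the difference and adjusts the running Monitor pods accordingly.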

The Rook Operator also initializes the agents needed to consume the storage. Rook automatically configures the Ceph-CSI driver, which is used to mount storage into Pods.

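Deploying Rook-Ceph

Now let's deploy Rook. The procedure is described on the official page, so I follow it here.

Rook Docs - Ceph Storage Quickstart

Environment

The environment in which I deployed Rook is as follows.

- Platform: VirtualBox

- OS: CentOS 7.6

- Kubernetes version: v1.15.1

- Kubernetes nodes: 1 master node, 2 worker nodes

Deploying the common resources

First, deploy common.yaml. This YAML file defines the resources required to start the Operator and the Ceph cluster, so it must be deployed before anything else when using Rook.

common.yaml defines the Namespace dedicated to Rook-Ceph, the CRDs, the Service Accounts used to access resources, ClusterRoleBindings, and so on.

Deploying it creates a large number of resources, as shown below.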

    [root@kube-master01 ceph]# kubectl apply -f common.yaml
    namespace/rook-ceph created
    customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created
    customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created
    customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created
    customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created
    customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created
    customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created
    customresourcedefinition.apiextensions.k8s.io/volumes.rook.io created
    customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created
    customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created
    clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt-rules created
    role.rbac.authorization.k8s.io/rook-ceph-system created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-global created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-global-rules created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster-rules created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created
    serviceaccount/rook-ceph-system created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-system created
    clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created
    serviceaccount/rook-ceph-osd created
    serviceaccount/rook-ceph-mgr created
    serviceaccount/rook-ceph-cmd-reporter created
    role.rbac.authorization.k8s.io/rook-ceph-osd created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created
    clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system-rules created
    role.rbac.authorization.k8s.io/rook-ceph-mgr created
    role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created
    clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created
    clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created
    podsecuritypolicy.policy/rook-privileged created
    clusterrole.rbac.authorization.k8s.io/psp:rook created
    clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system-psp created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-default-psp created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-osd-psp created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-psp created
    rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter-psp created
    serviceaccount/rook-csi-cephfs-plugin-sa created
    serviceaccount/rook-csi-cephfs-provisioner-sa created
    role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created
    rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created
    clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created
    clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin-rules created
    clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created
    clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner-rules created
    clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-plugin-sa-psp created
    clusterrolebinding.rbac.authorization.k8s.io/rook-csi-cephfs-provisioner-sa-psp created
    clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created
    clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created
    serviceaccount/rook-csi-rbd-plugin-sa created
    serviceaccount/rook-csi-rbd-provisioner-sa created
    role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created
    rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created
    clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created
    clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin-rules created
    clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created
    clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner-rules created
    clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-plugin-sa-psp created
    clusterrolebinding.rbac.authorization.k8s.io/rook-csi-rbd-provisioner-sa-psp created
    clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created
    clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created
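
With this much output it can help to see how many resources of each kind were created. The sketch below is one possible way to tally them from saved `kubectl apply` output; the function name is hypothetical, and the sample is a handful of lines taken from the output above.

```python
import re
from collections import Counter


def count_created_kinds(output: str) -> Counter:
    """Count 'type/name created' entries by resource type."""
    kinds = Counter()
    # Each entry looks like "<type>[.<api-group>]/<name> created".
    for resource_type, _name in re.findall(r"(\S+?)/(\S+)\s+created\b", output):
        kinds[resource_type.split(".", 1)[0]] += 1
    return kinds


sample = """\
namespace/rook-ceph created
customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created
serviceaccount/rook-ceph-system created
clusterrole.rbac.authorization.k8s.io/psp:rook created
clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created
"""
counts = count_created_kinds(sample)
for kind, number in sorted(counts.items()):
    print(kind, number)
```

Feeding the full output through the same function gives a quick overview of how much of common.yaml is RBAC plumbing compared with CRDs and Service Accounts.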

common.yaml itself is pasted below. The file is more than 1,500 lines long, and # OLM: BEGIN / END comments mark what each section contains.
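
As an aside, those markers make the file easy to navigate with a small script. The sketch below lists the section names from the BEGIN markers and reports any that have no END marker with the same name. It assumes the original file layout, with each marker comment on its own line; the function name is hypothetical, and the sample markers are copied from common.yaml.

```python
import re
from typing import List, Tuple


def olm_sections(text: str) -> Tuple[List[str], List[str]]:
    """Return (section names from BEGIN markers, names lacking a matching END)."""
    begins = re.findall(r"^# OLM: BEGIN (.+?)[ \t]*$", text, flags=re.MULTILINE)
    ends = set(re.findall(r"^# OLM: END (.+?)[ \t]*$", text, flags=re.MULTILINE))
    return begins, [name for name in begins if name not in ends]


sample = """\
# OLM: BEGIN CEPH CRD
kind: CustomResourceDefinition
# OLM: END CEPH CRD
# OLM: BEGIN POD SECURITY POLICY BINDINGS
kind: ClusterRole
# OLM: END CLUSTER POD SECURITY POLICY BINDINGS
"""
names, unmatched = olm_sections(sample)
print(names)
print(unmatched)
```

In the file pasted below, the pod security policy bindings section is one place where the BEGIN and END names differ, which is why the sketch reports unmatched names instead of assuming every pair lines up.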

common.yaml

################################################################################################################### # Create the common resources that are necessary to start the operator and the ceph cluster. # These resources *must* be created before the operator.yaml and cluster.yaml or their variants. # The samples all assume that a single operator will manage a single cluster crd in the same "rook-ceph" namespace. # # If the operator needs to manage multiple clusters (in different namespaces), see the section below # for "cluster-specific resources". The resources below that section will need to be created for each namespace # where the operator needs to manage the cluster. The resources above that section do not be created again. # # Most of the sections are prefixed with a 'OLM' keyword which is used to build our CSV for an OLM (Operator Life Cycle manager) ################################################################################################################### # Namespace where the operator and other rook resources are created apiVersion: v1 kind: Namespace metadata: name: rook-ceph # OLM: BEGIN CEPH CRD # The CRD declarations --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: cephclusters.ceph.rook.io spec: group: ceph.rook.io names: kind: CephCluster listKind: CephClusterList plural: cephclusters singular: cephcluster scope: Namespaced version: v1 validation: openAPIV3Schema: properties: spec: properties: annotations: {} cephVersion: properties: allowUnsupported: type: boolean image: type: string dashboard: properties: enabled: type: boolean urlPrefix: type: string port: type: integer minimum: 0 maximum: 65535 ssl: type: boolean dataDirHostPath: pattern: ^/(\S+) type: string disruptionManagement: properties: machineDisruptionBudgetNamespace: type: string managePodBudgets: type: boolean osdMaintenanceTimeout: type: integer manageMachineDisruptionBudgets: type: boolean skipUpgradeChecks: type: boolean mon: 
properties: allowMultiplePerNode: type: boolean count: maximum: 9 minimum: 0 type: integer volumeClaimTemplate: {} mgr: properties: modules: items: properties: name: type: string enabled: type: boolean network: properties: hostNetwork: type: boolean provider: type: string selectors: {} storage: properties: disruptionManagement: properties: machineDisruptionBudgetNamespace: type: string managePodBudgets: type: boolean osdMaintenanceTimeout: type: integer manageMachineDisruptionBudgets: type: boolean useAllNodes: type: boolean nodes: items: properties: name: type: string config: properties: metadataDevice: type: string storeType: type: string pattern: ^(filestore|bluestore)$ databaseSizeMB: type: string walSizeMB: type: string journalSizeMB: type: string osdsPerDevice: type: string encryptedDevice: type: string pattern: ^(true|false)$ useAllDevices: type: boolean deviceFilter: {} directories: type: array items: properties: path: type: string devices: type: array items: properties: name: type: string config: {} location: {} resources: {} type: array useAllDevices: type: boolean deviceFilter: {} location: {} directories: type: array items: properties: path: type: string config: {} topologyAware: type: boolean storageClassDeviceSets: {} monitoring: properties: enabled: type: boolean rulesNamespace: type: string rbdMirroring: properties: workers: type: integer external: properties: enable: type: boolean placement: {} resources: {} additionalPrinterColumns: - name: DataDirHostPath type: string description: Directory used on the K8s nodes JSONPath: .spec.dataDirHostPath - name: MonCount type: string description: Number of MONs JSONPath: .spec.mon.count - name: Age type: date JSONPath: .metadata.creationTimestamp - name: State type: string description: Current State JSONPath: .status.state - name: Health type: string description: Ceph Health JSONPath: .status.ceph.health # OLM: END CEPH CRD # OLM: BEGIN CEPH FS CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: 
CustomResourceDefinition metadata: name: cephfilesystems.ceph.rook.io spec: group: ceph.rook.io names: kind: CephFilesystem listKind: CephFilesystemList plural: cephfilesystems singular: cephfilesystem scope: Namespaced version: v1 validation: openAPIV3Schema: properties: spec: properties: metadataServer: properties: activeCount: minimum: 1 maximum: 10 type: integer activeStandby: type: boolean annotations: {} placement: {} resources: {} metadataPool: properties: failureDomain: type: string replicated: properties: size: minimum: 1 maximum: 10 type: integer erasureCoded: properties: dataChunks: type: integer codingChunks: type: integer dataPools: type: array items: properties: failureDomain: type: string replicated: properties: size: minimum: 1 maximum: 10 type: integer erasureCoded: properties: dataChunks: type: integer codingChunks: type: integer additionalPrinterColumns: - name: ActiveMDS type: string description: Number of desired active MDS daemons JSONPath: .spec.metadataServer.activeCount - name: Age type: date JSONPath: .metadata.creationTimestamp # OLM: END CEPH FS CRD # OLM: BEGIN CEPH NFS CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: cephnfses.ceph.rook.io spec: group: ceph.rook.io names: kind: CephNFS listKind: CephNFSList plural: cephnfses singular: cephnfs shortNames: - nfs scope: Namespaced version: v1 validation: openAPIV3Schema: properties: spec: properties: rados: properties: pool: type: string namespace: type: string server: properties: active: type: integer annotations: {} placement: {} resources: {} # OLM: END CEPH NFS CRD # OLM: BEGIN CEPH OBJECT STORE CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: cephobjectstores.ceph.rook.io spec: group: ceph.rook.io names: kind: CephObjectStore listKind: CephObjectStoreList plural: cephobjectstores singular: cephobjectstore scope: Namespaced version: v1 validation: openAPIV3Schema: properties: spec: 
properties: gateway: properties: type: type: string sslCertificateRef: {} port: type: integer securePort: {} instances: type: integer annotations: {} placement: {} resources: {} metadataPool: properties: failureDomain: type: string replicated: properties: size: type: integer erasureCoded: properties: dataChunks: type: integer codingChunks: type: integer dataPool: properties: failureDomain: type: string replicated: properties: size: type: integer erasureCoded: properties: dataChunks: type: integer codingChunks: type: integer # OLM: END CEPH OBJECT STORE CRD # OLM: BEGIN CEPH OBJECT STORE USERS CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: cephobjectstoreusers.ceph.rook.io spec: group: ceph.rook.io names: kind: CephObjectStoreUser listKind: CephObjectStoreUserList plural: cephobjectstoreusers singular: cephobjectstoreuser shortNames: - rcou - objectuser scope: Namespaced version: v1 # OLM: END CEPH OBJECT STORE USERS CRD # OLM: BEGIN CEPH BLOCK POOL CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: cephblockpools.ceph.rook.io spec: group: ceph.rook.io names: kind: CephBlockPool listKind: CephBlockPoolList plural: cephblockpools singular: cephblockpool scope: Namespaced version: v1 # OLM: END CEPH BLOCK POOL CRD # OLM: BEGIN CEPH VOLUME POOL CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: volumes.rook.io spec: group: rook.io names: kind: Volume listKind: VolumeList plural: volumes singular: volume shortNames: - rv scope: Namespaced version: v1alpha2 # OLM: END CEPH VOLUME POOL CRD # OLM: BEGIN OBJECTBUCKET CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: objectbuckets.objectbucket.io spec: group: objectbucket.io versions: - name: v1alpha1 served: true storage: true names: kind: ObjectBucket listKind: ObjectBucketList plural: objectbuckets singular: objectbucket shortNames: - ob - 
obs scope: Cluster subresources: status: {} # OLM: END OBJECTBUCKET CRD # OLM: BEGIN OBJECTBUCKETCLAIM CRD --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: objectbucketclaims.objectbucket.io spec: versions: - name: v1alpha1 served: true storage: true group: objectbucket.io names: kind: ObjectBucketClaim listKind: ObjectBucketClaimList plural: objectbucketclaims singular: objectbucketclaim shortNames: - obc - obcs scope: Namespaced subresources: status: {} # OLM: END OBJECTBUCKETCLAIM CRD # OLM: BEGIN OBJECTBUCKET ROLEBINDING --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-object-bucket roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: rook-ceph-object-bucket subjects: - kind: ServiceAccount name: rook-ceph-system namespace: rook-ceph # OLM: END OBJECTBUCKET ROLEBINDING # OLM: BEGIN OPERATOR ROLE --- # The cluster role for managing all the cluster-specific resources in a namespace apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRole metadata: name: rook-ceph-cluster-mgmt labels: operator: rook storage-backend: ceph aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-rook-ceph-cluster-mgmt: "true" rules: [] --- apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRole metadata: name: rook-ceph-cluster-mgmt-rules labels: operator: rook storage-backend: ceph rbac.ceph.rook.io/aggregate-to-rook-ceph-cluster-mgmt: "true" rules: - apiGroups: - "" resources: - secrets - pods - pods/log - services - configmaps verbs: - get - list - watch - patch - create - update - delete - apiGroups: - apps resources: - deployments - daemonsets verbs: - get - list - watch - create - update - delete --- # The role for the operator to manage resources in its own namespace apiVersion: rbac.authorization.k8s.io/v1beta1 kind: Role metadata: name: rook-ceph-system namespace: rook-ceph labels: operator: rook storage-backend: ceph 
rules: - apiGroups: - "" resources: - pods - configmaps - services verbs: - get - list - watch - patch - create - update - delete - apiGroups: - apps resources: - daemonsets - statefulsets - deployments verbs: - get - list - watch - create - update - delete --- # The cluster role for managing the Rook CRDs apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRole metadata: name: rook-ceph-global labels: operator: rook storage-backend: ceph aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-rook-ceph-global: "true" rules: [] --- apiVersion: rbac.authorization.k8s.io/v1beta1 kind: ClusterRole metadata: name: rook-ceph-global-rules labels: operator: rook storage-backend: ceph rbac.ceph.rook.io/aggregate-to-rook-ceph-global: "true" rules: - apiGroups: - "" resources: # Pod access is needed for fencing - pods # Node access is needed for determining nodes where mons should run - nodes - nodes/proxy verbs: - get - list - watch - apiGroups: - "" resources: - events # PVs and PVCs are managed by the Rook provisioner - persistentvolumes - persistentvolumeclaims - endpoints verbs: - get - list - watch - patch - create - update - delete - apiGroups: - storage.k8s.io resources: - storageclasses verbs: - get - list - watch - apiGroups: - batch resources: - jobs verbs: - get - list - watch - create - update - delete - apiGroups: - ceph.rook.io resources: - "*" verbs: - "*" - apiGroups: - rook.io resources: - "*" verbs: - "*" - apiGroups: - policy - apps resources: #this is for the clusterdisruption controller - poddisruptionbudgets #this is for both clusterdisruption and nodedrain controllers - deployments verbs: - "*" - apiGroups: - healthchecking.openshift.io resources: - machinedisruptionbudgets verbs: - get - list - watch - create - update - delete - apiGroups: - machine.openshift.io resources: - machines verbs: - get - list - watch - create - update - delete --- # Aspects of ceph-mgr that require cluster-wide access kind: ClusterRole 
apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr-cluster labels: operator: rook storage-backend: ceph aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-rook-ceph-mgr-cluster: "true" rules: [] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr-cluster-rules labels: operator: rook storage-backend: ceph rbac.ceph.rook.io/aggregate-to-rook-ceph-mgr-cluster: "true" rules: - apiGroups: - "" resources: - configmaps - nodes - nodes/proxy verbs: - get - list - watch - apiGroups: - "" resources: - events verbs: - create - patch - list - get - watch --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-object-bucket labels: operator: rook storage-backend: ceph rbac.ceph.rook.io/aggregate-to-rook-ceph-mgr-cluster: "true" rules: - apiGroups: - "" verbs: - "*" resources: - secrets - configmaps - apiGroups: - storage.k8s.io resources: - storageclasses verbs: - get - list - watch - apiGroups: - "objectbucket.io" verbs: - "*" resources: - "*" # OLM: END OPERATOR ROLE # OLM: BEGIN SERVICE ACCOUNT SYSTEM --- # The rook system service account used by the operator, agent, and discovery pods apiVersion: v1 kind: ServiceAccount metadata: name: rook-ceph-system namespace: rook-ceph labels: operator: rook storage-backend: ceph # imagePullSecrets: # - name: my-registry-secret # OLM: END SERVICE ACCOUNT SYSTEM # OLM: BEGIN OPERATOR ROLEBINDING --- # Grant the operator, agent, and discovery agents access to resources in the namespace kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-system namespace: rook-ceph labels: operator: rook storage-backend: ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: rook-ceph-system subjects: - kind: ServiceAccount name: rook-ceph-system namespace: rook-ceph --- # Grant the rook system daemons cluster-wide access to manage the Rook CRDs, PVCs, and storage 
classes kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-global namespace: rook-ceph labels: operator: rook storage-backend: ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: rook-ceph-global subjects: - kind: ServiceAccount name: rook-ceph-system namespace: rook-ceph # OLM: END OPERATOR ROLEBINDING ################################################################################################################# # Beginning of cluster-specific resources. The example will assume the cluster will be created in the "rook-ceph" # namespace. If you want to create the cluster in a different namespace, you will need to modify these roles # and bindings accordingly. ################################################################################################################# # Service account for the Ceph OSDs. Must exist and cannot be renamed. # OLM: BEGIN SERVICE ACCOUNT OSD --- apiVersion: v1 kind: ServiceAccount metadata: name: rook-ceph-osd namespace: rook-ceph # imagePullSecrets: # - name: my-registry-secret # OLM: END SERVICE ACCOUNT OSD # OLM: BEGIN SERVICE ACCOUNT MGR --- # Service account for the Ceph Mgr. Must exist and cannot be renamed. 
apiVersion: v1 kind: ServiceAccount metadata: name: rook-ceph-mgr namespace: rook-ceph # imagePullSecrets: # - name: my-registry-secret # OLM: END SERVICE ACCOUNT MGR # OLM: BEGIN CMD REPORTER SERVICE ACCOUNT --- apiVersion: v1 kind: ServiceAccount metadata: name: rook-ceph-cmd-reporter namespace: rook-ceph # OLM: END CMD REPORTER SERVICE ACCOUNT # OLM: BEGIN CLUSTER ROLE --- kind: Role apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-osd namespace: rook-ceph rules: - apiGroups: [""] resources: ["configmaps"] verbs: [ "get", "list", "watch", "create", "update", "delete" ] - apiGroups: ["ceph.rook.io"] resources: ["cephclusters", "cephclusters/finalizers"] verbs: [ "get", "list", "create", "update", "delete" ] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-osd namespace: rook-ceph rules: - apiGroups: - "" resources: - nodes verbs: - get - list --- # Aspects of ceph-mgr that require access to the system namespace kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr-system namespace: rook-ceph aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-rook-ceph-mgr-system: "true" rules: [] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr-system-rules namespace: rook-ceph labels: rbac.ceph.rook.io/aggregate-to-rook-ceph-mgr-system: "true" rules: - apiGroups: - "" resources: - configmaps verbs: - get - list - watch --- # Aspects of ceph-mgr that operate within the cluster's namespace kind: Role apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr namespace: rook-ceph rules: - apiGroups: - "" resources: - pods - services verbs: - get - list - watch - apiGroups: - batch resources: - jobs verbs: - get - list - watch - create - update - delete - apiGroups: - ceph.rook.io resources: - "*" verbs: - "*" # OLM: END CLUSTER ROLE # OLM: BEGIN CMD REPORTER ROLE --- kind: Role 
apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-cmd-reporter namespace: rook-ceph rules: - apiGroups: - "" resources: - pods - configmaps verbs: - get - list - watch - create - update - delete # OLM: END CMD REPORTER ROLE # OLM: BEGIN CLUSTER ROLEBINDING --- # Allow the operator to create resources in this cluster's namespace kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-cluster-mgmt namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: rook-ceph-cluster-mgmt subjects: - kind: ServiceAccount name: rook-ceph-system namespace: rook-ceph --- # Allow the osd pods in this namespace to work with configmaps kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-osd namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: rook-ceph-osd subjects: - kind: ServiceAccount name: rook-ceph-osd namespace: rook-ceph --- # Allow the ceph mgr to access the cluster-specific resources necessary for the mgr modules kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: rook-ceph-mgr subjects: - kind: ServiceAccount name: rook-ceph-mgr namespace: rook-ceph --- # Allow the ceph mgr to access the rook system resources necessary for the mgr modules kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr-system namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: rook-ceph-mgr-system subjects: - kind: ServiceAccount name: rook-ceph-mgr namespace: rook-ceph --- # Allow the ceph mgr to access cluster-wide resources necessary for the mgr modules kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-mgr-cluster roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: 
rook-ceph-mgr-cluster subjects: - kind: ServiceAccount name: rook-ceph-mgr namespace: rook-ceph --- # Allow the ceph osd to access cluster-wide resources necessary for determining their topology location kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-osd roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: rook-ceph-osd subjects: - kind: ServiceAccount name: rook-ceph-osd namespace: rook-ceph # OLM: END CLUSTER ROLEBINDING # OLM: BEGIN CMD REPORTER ROLEBINDING --- kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1beta1 metadata: name: rook-ceph-cmd-reporter namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: Role name: rook-ceph-cmd-reporter subjects: - kind: ServiceAccount name: rook-ceph-cmd-reporter namespace: rook-ceph # OLM: END CMD REPORTER ROLEBINDING ################################################################################################################# # Beginning of pod security policy resources. The example will assume the cluster will be created in the # "rook-ceph" namespace. If you want to create the cluster in a different namespace, you will need to modify # the roles and bindings accordingly. 
################################################################################################################# # OLM: BEGIN CLUSTER POD SECURITY POLICY --- apiVersion: policy/v1beta1 kind: PodSecurityPolicy metadata: name: rook-privileged spec: privileged: true allowedCapabilities: # required by CSI - SYS_ADMIN # fsGroup - the flexVolume agent has fsGroup capabilities and could potentially be any group fsGroup: rule: RunAsAny # runAsUser, supplementalGroups - Rook needs to run some pods as root # Ceph pods could be run as the Ceph user, but that user isn't always known ahead of time runAsUser: rule: RunAsAny supplementalGroups: rule: RunAsAny # seLinux - seLinux context is unknown ahead of time; set if this is well-known seLinux: rule: RunAsAny volumes: # recommended minimum set - configMap - downwardAPI - emptyDir - persistentVolumeClaim - secret - projected # required for Rook - hostPath - flexVolume # allowedHostPaths can be set to Rook's known host volume mount points when they are fully-known # directory-based OSDs make this hard to nail down # allowedHostPaths: # - pathPrefix: "/run/udev" # for OSD prep # readOnly: false # - pathPrefix: "/dev" # for OSD prep # readOnly: false # - pathPrefix: "/var/lib/rook" # or whatever the dataDirHostPath value is set to # readOnly: false # Ceph requires host IPC for setting up encrypted devices hostIPC: true # Ceph OSDs need to share the same PID namespace hostPID: true # hostNetwork can be set to 'false' if host networking isn't used hostNetwork: true hostPorts: # Ceph messenger protocol v1 - min: 6789 max: 6790 # <- support old default port # Ceph messenger protocol v2 - min: 3300 max: 3300 # Ceph RADOS ports for OSDs, MDSes - min: 6800 max: 7300 # # Ceph dashboard port HTTP (not recommended) # - min: 7000 # max: 7000 # Ceph dashboard port HTTPS - min: 8443 max: 8443 # Ceph mgr Prometheus Metrics - min: 9283 max: 9283 # OLM: END CLUSTER POD SECURITY POLICY # OLM: BEGIN POD SECURITY POLICY BINDINGS --- apiVersion: 
rbac.authorization.k8s.io/v1 kind: ClusterRole metadata: name: 'psp:rook' rules: - apiGroups: - policy resources: - podsecuritypolicies resourceNames: - rook-privileged verbs: - use --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: rook-ceph-system-psp roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: 'psp:rook' subjects: - kind: ServiceAccount name: rook-ceph-system namespace: rook-ceph --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: rook-ceph-default-psp namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: psp:rook subjects: - kind: ServiceAccount name: default namespace: rook-ceph --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: rook-ceph-osd-psp namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: psp:rook subjects: - kind: ServiceAccount name: rook-ceph-osd namespace: rook-ceph --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: rook-ceph-mgr-psp namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: psp:rook subjects: - kind: ServiceAccount name: rook-ceph-mgr namespace: rook-ceph --- apiVersion: rbac.authorization.k8s.io/v1 kind: RoleBinding metadata: name: rook-ceph-cmd-reporter-psp namespace: rook-ceph roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: psp:rook subjects: - kind: ServiceAccount name: rook-ceph-cmd-reporter namespace: rook-ceph # OLM: END CLUSTER POD SECURITY POLICY BINDINGS # OLM: BEGIN CSI CEPHFS SERVICE ACCOUNT --- apiVersion: v1 kind: ServiceAccount metadata: name: rook-csi-cephfs-plugin-sa namespace: rook-ceph --- apiVersion: v1 kind: ServiceAccount metadata: name: rook-csi-cephfs-provisioner-sa namespace: rook-ceph # OLM: END CSI CEPHFS SERVICE ACCOUNT # OLM: BEGIN CSI CEPHFS ROLE --- kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: namespace: rook-ceph 
name: cephfs-external-provisioner-cfg rules: - apiGroups: [""] resources: ["endpoints"] verbs: ["get", "watch", "list", "delete", "update", "create"] - apiGroups: [""] resources: ["configmaps"] verbs: ["get", "list", "create", "delete"] - apiGroups: ["coordination.k8s.io"] resources: ["leases"] verbs: ["get", "watch", "list", "delete", "update", "create"] # OLM: END CSI CEPHFS ROLE # OLM: BEGIN CSI CEPHFS ROLEBINDING --- kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: cephfs-csi-provisioner-role-cfg namespace: rook-ceph subjects: - kind: ServiceAccount name: rook-csi-cephfs-provisioner-sa namespace: rook-ceph roleRef: kind: Role name: cephfs-external-provisioner-cfg apiGroup: rbac.authorization.k8s.io # OLM: END CSI CEPHFS ROLEBINDING # OLM: BEGIN CSI CEPHFS CLUSTER ROLE --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: cephfs-csi-nodeplugin aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-cephfs-csi-nodeplugin: "true" rules: [] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: cephfs-csi-nodeplugin-rules labels: rbac.ceph.rook.io/aggregate-to-cephfs-csi-nodeplugin: "true" rules: - apiGroups: [""] resources: ["nodes"] verbs: ["get", "list", "update"] - apiGroups: [""] resources: ["namespaces"] verbs: ["get", "list"] - apiGroups: [""] resources: ["persistentvolumes"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["storage.k8s.io"] resources: ["volumeattachments"] verbs: ["get", "list", "watch", "update"] - apiGroups: [""] resources: ["configmaps"] verbs: ["get", "list"] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: cephfs-external-provisioner-runner aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-cephfs-external-provisioner-runner: "true" rules: [] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: cephfs-external-provisioner-runner-rules 
labels: rbac.ceph.rook.io/aggregate-to-cephfs-external-provisioner-runner: "true" rules: - apiGroups: [""] resources: ["secrets"] verbs: ["get", "list"] - apiGroups: [""] resources: ["persistentvolumes"] verbs: ["get", "list", "watch", "create", "delete", "update"] - apiGroups: [""] resources: ["persistentvolumeclaims"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["storage.k8s.io"] resources: ["storageclasses"] verbs: ["get", "list", "watch"] - apiGroups: [""] resources: ["events"] verbs: ["list", "watch", "create", "update", "patch"] - apiGroups: ["storage.k8s.io"] resources: ["volumeattachments"] verbs: ["get", "list", "watch", "update"] - apiGroups: [""] resources: ["nodes"] verbs: ["get", "list", "watch"] # OLM: END CSI CEPHFS CLUSTER ROLE # OLM: BEGIN CSI CEPHFS CLUSTER ROLEBINDING --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: rook-csi-cephfs-plugin-sa-psp roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: 'psp:rook' subjects: - kind: ServiceAccount name: rook-csi-cephfs-plugin-sa namespace: rook-ceph --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: rook-csi-cephfs-provisioner-sa-psp roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: 'psp:rook' subjects: - kind: ServiceAccount name: rook-csi-cephfs-provisioner-sa namespace: rook-ceph --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: cephfs-csi-nodeplugin subjects: - kind: ServiceAccount name: rook-csi-cephfs-plugin-sa namespace: rook-ceph roleRef: kind: ClusterRole name: cephfs-csi-nodeplugin apiGroup: rbac.authorization.k8s.io --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: cephfs-csi-provisioner-role subjects: - kind: ServiceAccount name: rook-csi-cephfs-provisioner-sa namespace: rook-ceph roleRef: kind: ClusterRole name: cephfs-external-provisioner-runner apiGroup: rbac.authorization.k8s.io # OLM: END CSI CEPHFS 
CLUSTER ROLEBINDING # OLM: BEGIN CSI RBD SERVICE ACCOUNT --- apiVersion: v1 kind: ServiceAccount metadata: name: rook-csi-rbd-plugin-sa namespace: rook-ceph --- apiVersion: v1 kind: ServiceAccount metadata: name: rook-csi-rbd-provisioner-sa namespace: rook-ceph # OLM: END CSI RBD SERVICE ACCOUNT # OLM: BEGIN CSI RBD ROLE --- kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: namespace: rook-ceph name: rbd-external-provisioner-cfg rules: - apiGroups: [""] resources: ["endpoints"] verbs: ["get", "watch", "list", "delete", "update", "create"] - apiGroups: [""] resources: ["configmaps"] verbs: ["get", "list", "watch", "create", "delete"] - apiGroups: ["coordination.k8s.io"] resources: ["leases"] verbs: ["get", "watch", "list", "delete", "update", "create"] # OLM: END CSI RBD ROLE # OLM: BEGIN CSI RBD ROLEBINDING --- kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: rbd-csi-provisioner-role-cfg namespace: rook-ceph subjects: - kind: ServiceAccount name: rook-csi-rbd-provisioner-sa namespace: rook-ceph roleRef: kind: Role name: rbd-external-provisioner-cfg apiGroup: rbac.authorization.k8s.io # OLM: END CSI RBD ROLEBINDING # OLM: BEGIN CSI RBD CLUSTER ROLE --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: rbd-csi-nodeplugin aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-rbd-csi-nodeplugin: "true" rules: [] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: rbd-csi-nodeplugin-rules labels: rbac.ceph.rook.io/aggregate-to-rbd-csi-nodeplugin: "true" rules: - apiGroups: [""] resources: ["secrets"] verbs: ["get", "list"] - apiGroups: [""] resources: ["nodes"] verbs: ["get", "list", "update"] - apiGroups: [""] resources: ["namespaces"] verbs: ["get", "list"] - apiGroups: [""] resources: ["persistentvolumes"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["storage.k8s.io"] resources: ["volumeattachments"] verbs: ["get", "list", "watch", 
"update"] - apiGroups: [""] resources: ["configmaps"] verbs: ["get", "list"] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: rbd-external-provisioner-runner aggregationRule: clusterRoleSelectors: - matchLabels: rbac.ceph.rook.io/aggregate-to-rbd-external-provisioner-runner: "true" rules: [] --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: rbd-external-provisioner-runner-rules labels: rbac.ceph.rook.io/aggregate-to-rbd-external-provisioner-runner: "true" rules: - apiGroups: [""] resources: ["secrets"] verbs: ["get", "list"] - apiGroups: [""] resources: ["persistentvolumes"] verbs: ["get", "list", "watch", "create", "delete", "update"] - apiGroups: [""] resources: ["persistentvolumeclaims"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["storage.k8s.io"] resources: ["volumeattachments"] verbs: ["get", "list", "watch", "update"] - apiGroups: [""] resources: ["nodes"] verbs: ["get", "list", "watch"] - apiGroups: ["storage.k8s.io"] resources: ["storageclasses"] verbs: ["get", "list", "watch"] - apiGroups: [""] resources: ["events"] verbs: ["list", "watch", "create", "update", "patch"] - apiGroups: ["snapshot.storage.k8s.io"] resources: ["volumesnapshots"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["snapshot.storage.k8s.io"] resources: ["volumesnapshotcontents"] verbs: ["create", "get", "list", "watch", "update", "delete"] - apiGroups: ["snapshot.storage.k8s.io"] resources: ["volumesnapshotclasses"] verbs: ["get", "list", "watch"] - apiGroups: ["apiextensions.k8s.io"] resources: ["customresourcedefinitions"] verbs: ["create", "list", "watch", "delete", "get", "update"] - apiGroups: ["snapshot.storage.k8s.io"] resources: ["volumesnapshots/status"] verbs: ["update"] # OLM: END CSI RBD CLUSTER ROLE # OLM: BEGIN CSI RBD CLUSTER ROLEBINDING --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: rook-csi-rbd-plugin-sa-psp roleRef: apiGroup: 
rbac.authorization.k8s.io kind: ClusterRole name: 'psp:rook' subjects: - kind: ServiceAccount name: rook-csi-rbd-plugin-sa namespace: rook-ceph --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: rook-csi-rbd-provisioner-sa-psp roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: 'psp:rook' subjects: - kind: ServiceAccount name: rook-csi-rbd-provisioner-sa namespace: rook-ceph --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: rbd-csi-nodeplugin subjects: - kind: ServiceAccount name: rook-csi-rbd-plugin-sa namespace: rook-ceph roleRef: kind: ClusterRole name: rbd-csi-nodeplugin apiGroup: rbac.authorization.k8s.io --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: rbd-csi-provisioner-role subjects: - kind: ServiceAccount name: rook-csi-rbd-provisioner-sa namespace: rook-ceph roleRef: kind: ClusterRole name: rbd-external-provisioner-runner apiGroup: rbac.authorization.k8s.io # OLM: END CSI RBD CLUSTER ROLEBINDING

Deploying the Operator

Next, deploy operator.yaml.

[root@kube-master01 ceph]# kubectl apply -f operator.yaml
deployment.apps/rook-ceph-operator created
[root@kube-master01 ceph]# kubectl -n rook-ceph get pods
NAME                                  READY   STATUS    RESTARTS   AGE
rook-ceph-operator-54665977bf-2k748   1/1     Running   0          2m56s
rook-discover-944s2                   1/1     Running   0          104s
rook-discover-zc2sb                   1/1     Running   0          104s
[root@kube-master01 ceph]# kubectl -n rook-ceph get pods -o wide
NAME                                  READY   STATUS    RESTARTS   AGE     IP                NODE            NOMINATED NODE   READINESS GATES
rook-ceph-operator-54665977bf-2k748   1/1     Running   0          3m11s   192.168.132.56    kube-worker01   <none>           <none>
rook-discover-944s2                   1/1     Running   0          119s    192.168.247.223   kube-worker02   <none>           <none>
rook-discover-zc2sb                   1/1     Running   0          119s    192.168.132.7     kube-worker01   <none>           <none>
[root@kube-master01 ceph]#

In addition to the Operator Pod, rook-discover Pods are also deployed. A rook-discover Pod detects the storage devices attached to a storage node (here, the worker nodes).

operator.yaml is reproduced here as well.

operator.yaml

#################################################################################################################
# The deployment for the rook operator
# Contains the common settings for most Kubernetes deployments.
# For example, to create the rook-ceph cluster:
#   kubectl create -f common.yaml
#   kubectl create -f operator.yaml
#   kubectl create -f cluster.yaml
#
# Also see other operator sample files for variations of operator.yaml:
# - operator-openshift.yaml: Common settings for running in OpenShift
#################################################################################################################
# OLM: BEGIN OPERATOR DEPLOYMENT
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rook-ceph-operator
  namespace: rook-ceph
  labels:
    operator: rook
    storage-backend: ceph
spec:
  selector:
    matchLabels:
      app: rook-ceph-operator
  replicas: 1
  template:
    metadata:
      labels:
        app: rook-ceph-operator
    spec:
      serviceAccountName: rook-ceph-system
      containers:
      - name: rook-ceph-operator
        image: rook/ceph:master
        args: ["ceph", "operator"]
        volumeMounts:
        - mountPath: /var/lib/rook
          name: rook-config
        - mountPath: /etc/ceph
          name: default-config-dir
        env:
        # If the operator should only watch for cluster CRDs in the same namespace, set this to "true".
        # If this is not set to true, the operator will watch for cluster CRDs in all namespaces.
        - name: ROOK_CURRENT_NAMESPACE_ONLY
          value: "false"
        # To disable RBAC, uncomment the following:
        # - name: RBAC_ENABLED
        #   value: "false"
        # Rook Agent toleration. Will tolerate all taints with all keys.
        # Choose between NoSchedule, PreferNoSchedule and NoExecute:
        # - name: AGENT_TOLERATION
        #   value: "NoSchedule"
        # (Optional) Rook Agent toleration key. Set this to the key of the taint you want to tolerate
        # - name: AGENT_TOLERATION_KEY
        #   value: "<KeyOfTheTaintToTolerate>"
        # (Optional) Rook Agent tolerations list. Put here list of taints you want to tolerate in YAML format.
        # - name: AGENT_TOLERATIONS
        #   value: |
        #     - effect: NoSchedule
        #       key: node-role.kubernetes.io/controlplane
        #       operator: Exists
        #     - effect: NoExecute
        #       key: node-role.kubernetes.io/etcd
        #       operator: Exists
        # (Optional) Rook Agent NodeAffinity.
        # - name: AGENT_NODE_AFFINITY
        #   value: "role=storage-node; storage=rook,ceph"
        # (Optional) Rook Agent mount security mode. Can by `Any` or `Restricted`.
        # `Any` uses Ceph admin credentials by default/fallback.
        # For using `Restricted` you must have a Ceph secret in each namespace storage should be consumed from and
        # set `mountUser` to the Ceph user, `mountSecret` to the Kubernetes secret name.
        # to the namespace in which the `mountSecret` Kubernetes secret namespace.
        # - name: AGENT_MOUNT_SECURITY_MODE
        #   value: "Any"
        # Set the path where the Rook agent can find the flex volumes
        # - name: FLEXVOLUME_DIR_PATH
        #   value: "<PathToFlexVolumes>"
        # Set the path where kernel modules can be found
        # - name: LIB_MODULES_DIR_PATH
        #   value: "<PathToLibModules>"
        # Mount any extra directories into the agent container
        # - name: AGENT_MOUNTS
        #   value: "somemount=/host/path:/container/path,someothermount=/host/path2:/container/path2"
        # Rook Discover toleration. Will tolerate all taints with all keys.
        # Choose between NoSchedule, PreferNoSchedule and NoExecute:
        # - name: DISCOVER_TOLERATION
        #   value: "NoSchedule"
        # (Optional) Rook Discover toleration key. Set this to the key of the taint you want to tolerate
        # - name: DISCOVER_TOLERATION_KEY
        #   value: "<KeyOfTheTaintToTolerate>"
        # (Optional) Rook Discover tolerations list. Put here list of taints you want to tolerate in YAML format.
        # - name: DISCOVER_TOLERATIONS
        #   value: |
        #     - effect: NoSchedule
        #       key: node-role.kubernetes.io/controlplane
        #       operator: Exists
        #     - effect: NoExecute
        #       key: node-role.kubernetes.io/etcd
        #       operator: Exists
        # (Optional) Discover Agent NodeAffinity.
        # - name: DISCOVER_AGENT_NODE_AFFINITY
        #   value: "role=storage-node; storage=rook, ceph"
        # Allow rook to create multiple file systems. Note: This is considered
        # an experimental feature in Ceph as described at
        # http://docs.ceph.com/docs/master/cephfs/experimental-features/#multiple-filesystems-within-a-ceph-cluster
        # which might cause mons to crash as seen in https://github.com/rook/rook/issues/1027
        - name: ROOK_ALLOW_MULTIPLE_FILESYSTEMS
          value: "false"
        # The logging level for the operator: INFO | DEBUG
        - name: ROOK_LOG_LEVEL
          value: "INFO"
        # The interval to check the health of the ceph cluster and update the status in the custom resource.
        - name: ROOK_CEPH_STATUS_CHECK_INTERVAL
          value: "60s"
        # The interval to check if every mon is in the quorum.
        - name: ROOK_MON_HEALTHCHECK_INTERVAL
          value: "45s"
        # The duration to wait before trying to failover or remove/replace the
        # current mon with a new mon (useful for compensating flapping network).
        - name: ROOK_MON_OUT_TIMEOUT
          value: "600s"
        # The duration between discovering devices in the rook-discover daemonset.
        - name: ROOK_DISCOVER_DEVICES_INTERVAL
          value: "60m"
        # Whether to start pods as privileged that mount a host path, which includes the Ceph mon and osd pods.
        # This is necessary to workaround the anyuid issues when running on OpenShift.
        # For more details see https://github.com/rook/rook/issues/1314#issuecomment-355799641
        - name: ROOK_HOSTPATH_REQUIRES_PRIVILEGED
          value: "false"
        # In some situations SELinux relabelling breaks (times out) on large filesystems, and doesn't work with cephfs ReadWriteMany volumes (last relabel wins).
        # Disable it here if you have similar issues.
        # For more details see https://github.com/rook/rook/issues/2417
        - name: ROOK_ENABLE_SELINUX_RELABELING
          value: "true"
        # In large volumes it will take some time to chown all the files. Disable it here if you have performance issues.
        # For more details see https://github.com/rook/rook/issues/2254
        - name: ROOK_ENABLE_FSGROUP
          value: "true"
        # Disable automatic orchestration when new devices are discovered
        - name: ROOK_DISABLE_DEVICE_HOTPLUG
          value: "false"
        # Whether to enable the flex driver. By default it is enabled and is fully supported, but will be deprecated in some future release
        # in favor of the CSI driver.
        - name: ROOK_ENABLE_FLEX_DRIVER
          value: "false"
        # Whether to start the discovery daemon to watch for raw storage devices on nodes in the cluster.
        # This daemon does not need to run if you are only going to create your OSDs based on StorageClassDeviceSets with PVCs.
        - name: ROOK_ENABLE_DISCOVERY_DAEMON
          value: "true"
        # Enable the default version of the CSI CephFS driver. To start another version of the CSI driver, see image properties below.
        - name: ROOK_CSI_ENABLE_CEPHFS
          value: "true"
        # Enable the default version of the CSI RBD driver. To start another version of the CSI driver, see image properties below.
        - name: ROOK_CSI_ENABLE_RBD
          value: "true"
        - name: ROOK_CSI_ENABLE_GRPC_METRICS
          value: "true"
        # The default version of CSI supported by Rook will be started. To change the version
        # of the CSI driver to something other than what is officially supported, change
        # these images to the desired release of the CSI driver.
        #- name: ROOK_CSI_CEPH_IMAGE
        #  value: "quay.io/cephcsi/cephcsi:v1.2.1"
        #- name: ROOK_CSI_REGISTRAR_IMAGE
        #  value: "quay.io/k8scsi/csi-node-driver-registrar:v1.1.0"
        #- name: ROOK_CSI_PROVISIONER_IMAGE
        #  value: "quay.io/k8scsi/csi-provisioner:v1.3.0"
        #- name: ROOK_CSI_SNAPSHOTTER_IMAGE
        #  value: "quay.io/k8scsi/csi-snapshotter:v1.2.0"
        #- name: ROOK_CSI_ATTACHER_IMAGE
        #  value: "quay.io/k8scsi/csi-attacher:v1.2.0"
        # kubelet directory path, if kubelet configured to use other than /var/lib/kubelet path.
        #- name: ROOK_CSI_KUBELET_DIR_PATH
        #  value: "/var/lib/kubelet"
        # (Optional) Ceph Provisioner NodeAffinity.
        # - name: CSI_PROVISIONER_NODE_AFFINITY
        #   value: "role=storage-node; storage=rook, ceph"
        # (Optional) CEPH CSI provisioner tolerations list. Put here list of taints you want to tolerate in YAML format.
        # CSI provisioner would be best to start on the same nodes as other ceph daemons.
        # - name: CSI_PROVISIONER_TOLERATIONS
        #   value: |
        #     - effect: NoSchedule
        #       key: node-role.kubernetes.io/controlplane
        #       operator: Exists
        #     - effect: NoExecute
        #       key: node-role.kubernetes.io/etcd
        #       operator: Exists
        # (Optional) Ceph CSI plugin NodeAffinity.
        # - name: CSI_PLUGIN_NODE_AFFINITY
        #   value: "role=storage-node; storage=rook, ceph"
        # (Optional) CEPH CSI plugin tolerations list. Put here list of taints you want to tolerate in YAML format.
        # CSI plugins need to be started on all the nodes where the clients need to mount the storage.
        # - name: CSI_PLUGIN_TOLERATIONS
        #   value: |
        #     - effect: NoSchedule
        #       key: node-role.kubernetes.io/controlplane
        #       operator: Exists
        #     - effect: NoExecute
        #       key: node-role.kubernetes.io/etcd
        #       operator: Exists
        # Configure CSI cephfs grpc and liveness metrics port
        #- name: CSI_CEPHFS_GRPC_METRICS_PORT
        #  value: "9091"
        #- name: CSI_CEPHFS_LIVENESS_METRICS_PORT
        #  value: "9081"
        # Configure CSI rbd grpc and liveness metrics port
        #- name: CSI_RBD_GRPC_METRICS_PORT
        #  value: "9090"
        #- name: CSI_RBD_LIVENESS_METRICS_PORT
        #  value: "9080"
        # The name of the node to pass with the downward API
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # The pod name to pass with the downward API
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # The pod namespace to pass with the downward API
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      volumes:
      - name: rook-config
        emptyDir: {}
      - name: default-config-dir
        emptyDir: {}
# OLM: END OPERATOR DEPLOYMENT

Deploying the Cluster CRD

Next, deploy cluster-test.yaml, which defines the settings of the Rook-Ceph cluster. This file is intended for test environments; in production, use cluster.yaml instead.

# Deploy cluster-test.yaml
[root@kube-master01 ceph]# kubectl apply -f cluster-test.yaml
cephcluster.ceph.rook.io/rook-ceph created
[root@kube-master01 ceph]#

# State immediately after deployment
[root@kube-master01 ceph]# kubectl get pods -n rook-ceph
NAME                                           READY   STATUS              RESTARTS   AGE
csi-cephfsplugin-bkk66                         0/3     ContainerCreating   0          11s
csi-cephfsplugin-provisioner-974b566d9-9ht4p   0/4     ContainerCreating   0          11s
csi-cephfsplugin-provisioner-974b566d9-bkhnx   0/4     ContainerCreating   0          11s
csi-cephfsplugin-qn8tg                         0/3     ContainerCreating   0          11s
csi-rbdplugin-2h4rp                            0/3     ContainerCreating   0          11s
csi-rbdplugin-9mthf                            0/3     ContainerCreating   0          11s
csi-rbdplugin-provisioner-64f5b7db8f-ldm8k     0/5     ContainerCreating   0          11s
csi-rbdplugin-provisioner-64f5b7db8f-mtl8q     0/5     ContainerCreating   0          11s
rook-ceph-detect-version-ff7pd                 0/1     PodInitializing     0          5s
rook-ceph-operator-54665977bf-2k748            1/1     Running             0          9m35s
rook-discover-944s2                            1/1     Running             0          8m23s
rook-discover-zc2sb                            1/1     Running             0          8m23s

# Resources still being created (the OSDs do not exist yet)
[root@kube-master01 ceph]# kubectl get pods -n rook-ceph -o wide
NAME                                           READY   STATUS    RESTARTS   AGE     IP                NODE            NOMINATED NODE   READINESS GATES
csi-cephfsplugin-bkk66                         3/3     Running   0          2m14s   172.16.33.20      kube-worker01   <none>           <none>
csi-cephfsplugin-provisioner-974b566d9-9ht4p   4/4     Running   0          2m14s   192.168.247.224   kube-worker02   <none>           <none>
csi-cephfsplugin-provisioner-974b566d9-bkhnx   4/4     Running   0          2m14s   192.168.132.62    kube-worker01   <none>           <none>
csi-cephfsplugin-qn8tg                         3/3     Running   0          2m14s   172.16.33.30      kube-worker02   <none>           <none>
csi-rbdplugin-2h4rp                            3/3     Running   0          2m14s   172.16.33.20      kube-worker01   <none>           <none>
csi-rbdplugin-9mthf                            3/3     Running   0          2m14s   172.16.33.30      kube-worker02   <none>           <none>
csi-rbdplugin-provisioner-64f5b7db8f-ldm8k     5/5     Running   0          2m14s   192.168.247.222   kube-worker02   <none>           <none>
csi-rbdplugin-provisioner-64f5b7db8f-mtl8q     5/5     Running   0          2m14s   192.168.132.61    kube-worker01   <none>           <none>
rook-ceph-mgr-a-74db8dcf87-pn78m               1/1     Running   0          13s     192.168.247.232   kube-worker02   <none>           <none>
rook-ceph-mon-a-6c6b4cd4b6-tmsmj               1/1     Running   0          35s     192.168.247.220   kube-worker02   <none>           <none>
rook-ceph-operator-54665977bf-2k748            1/1     Running   0          11m     192.168.132.56    kube-worker01   <none>           <none>
rook-discover-944s2                            1/1     Running   0          10m     192.168.247.223   kube-worker02   <none>           <none>
rook-discover-zc2sb                            1/1     Running   0          10m     192.168.132.7     kube-worker01   <none>           <none>
[root@kube-master01 ceph]#

# After resource creation has completed
[root@kube-master01 ceph]# kubectl get pods -n rook-ceph -o wide
NAME                                           READY   STATUS      RESTARTS   AGE     IP                NODE            NOMINATED NODE   READINESS GATES
csi-cephfsplugin-bkk66                         3/3     Running     0          8m30s   172.16.33.20      kube-worker01   <none>           <none>
csi-cephfsplugin-provisioner-974b566d9-9ht4p   4/4     Running     0          8m30s   192.168.247.224   kube-worker02   <none>           <none>
csi-cephfsplugin-provisioner-974b566d9-bkhnx   4/4     Running     0          8m30s   192.168.132.62    kube-worker01   <none>           <none>
csi-cephfsplugin-qn8tg                         3/3     Running     0          8m30s   172.16.33.30      kube-worker02   <none>           <none>
csi-rbdplugin-2h4rp                            3/3     Running     0          8m30s   172.16.33.20      kube-worker01   <none>           <none>
csi-rbdplugin-9mthf                            3/3     Running     0          8m30s   172.16.33.30      kube-worker02   <none>           <none>
csi-rbdplugin-provisioner-64f5b7db8f-ldm8k     5/5     Running     0          8m30s   192.168.247.222   kube-worker02   <none>           <none>
csi-rbdplugin-provisioner-64f5b7db8f-mtl8q     5/5     Running     0          8m30s   192.168.132.61    kube-worker01   <none>           <none>
rook-ceph-mgr-a-74db8dcf87-pn78m               1/1     Running     0          6m29s   192.168.247.232   kube-worker02   <none>           <none>
rook-ceph-mon-a-6c6b4cd4b6-tmsmj               1/1     Running     0          6m51s   192.168.247.220   kube-worker02   <none>           <none>
rook-ceph-operator-54665977bf-2k748            1/1     Running     0          17m     192.168.132.56    kube-worker01   <none>           <none>
rook-ceph-osd-0-5f5c4cc499-2dxm6               1/1     Running     0          5m45s   192.168.247.229   kube-worker02   <none>           <none>
rook-ceph-osd-1-6c669dd464-pxmfr               1/1     Running     0          5m43s   192.168.132.2     kube-worker01   <none>           <none>
rook-ceph-osd-prepare-kube-worker01-cfwxd      0/1     Completed   0          5m55s   192.168.132.9     kube-worker01   <none>           <none>
rook-ceph-osd-prepare-kube-worker02-5rgln      0/1     Completed   0          5m54s   192.168.247.233   kube-worker02   <none>           <none>
rook-discover-944s2                            1/1     Running     0          16m     192.168.247.223   kube-worker02   <none>           <none>
rook-discover-zc2sb                            1/1     Running     0          16m     192.168.132.7     kube-worker01   <none>           <none>
[root@kube-master01 ceph]#

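Many Pods have been created. In addition to the Ceph Monitor Pod (rook-ceph-mon), the OSD Pods (rook-ceph-osd), the CephFS-related Pods (csi-cephfsplugin), and the RBD-related Pods (csi-rbdplugin), a Pod named rook-ceph-mgr has also been created. This is the Ceph Manager daemon, a component that provides additional monitoring and interfaces for external monitoring and management systems.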
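The listings above reach their final state once every Pod is either Completed or Running with all of its containers ready. As a rough sketch, that check can be scripted by parsing the `kubectl get pods` output. The function name `pods_settled` is hypothetical and is not part of Rook or kubectl; it also assumes the default column order (NAME, READY, STATUS, ...).

```shell
#!/usr/bin/env bash
# pods_settled: read `kubectl get pods` output on stdin and succeed only if
# every Pod is Completed, or Running with all of its containers ready.
# (Hypothetical helper for illustration; assumes the default column layout.)
pods_settled() {
  awk '
    NR == 1 { next }                  # skip the header row
    $3 == "Completed" { next }        # finished jobs such as rook-ceph-osd-prepare
    {
      split($2, ready, "/")           # READY column, e.g. "3/3"
      if ($3 != "Running" || ready[1] != ready[2]) {
        print "not ready: " $1 " (" $3 ", " $2 ")"
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

It could be used as `kubectl get pods -n rook-ceph | pods_settled && echo "all Pods settled"`; any Pod that is still starting is printed with its status.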

cluster-test.yaml is reproduced here as well.

cluster-test.yaml

#################################################################################################################
# Define the settings for the rook-ceph cluster with settings that should only be used in a test environment.
# A single filestore OSD will be created in the dataDirHostPath.
# For example, to create the cluster:
#   kubectl create -f common.yaml
#   kubectl create -f operator.yaml
#   kubectl create -f cluster-test.yaml
#################################################################################################################
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v14.2.4-20190917
    allowUnsupported: true
  dataDirHostPath: /var/lib/rook
  skipUpgradeChecks: false
  mon:
    count: 1
    allowMultiplePerNode: true
  dashboard:
    enabled: true
    ssl: true
  monitoring:
    enabled: false  # requires Prometheus to be pre-installed
    rulesNamespace: rook-ceph
  network:
    hostNetwork: false
  rbdMirroring:
    workers: 0
  mgr:
    modules:
    # the pg_autoscaler is only available on nautilus or newer. remove this if testing mimic.
    - name: pg_autoscaler
      enabled: true
  storage:
    useAllNodes: true
    useAllDevices: false
    deviceFilter:
    config:
      databaseSizeMB: "1024" # this value can be removed for environments with normal sized disks (100 GB or larger)
      journalSizeMB: "1024"  # this value can be removed for environments with normal sized disks (20 GB or larger)
      osdsPerDevice: "1" # this value can be overridden at the node or device level
    directories:
    - path: /var/lib/rook
#    nodes:
#    - name: "minikube"
#      directories:
#      - path: "/data/rook-dir"
#      devices:
#      - name: "sdb"
#      - name: "nvme01" # multiple osds can be created on high performance devices
#        config:
#          osdsPerDevice: "5"

Deploying the Toolbox

At this point, the preparation for using Rook is complete. Rook provides the Toolbox, a resource for debugging and troubleshooting a Rook cluster. Here, deploy toolbox.yaml so that the Toolbox Pod becomes available.

[root@kube-master01 ceph]# kubectl apply -f toolbox.yaml
deployment.apps/rook-ceph-tools created
[root@kube-master01 ceph]#
[root@kube-master01 ceph]# kubectl -n rook-ceph get pod -l "app=rook-ceph-tools"
NAME                               READY   STATUS    RESTARTS   AGE
rook-ceph-tools-7cf4cc7568-pjh48   1/1     Running   0          45s
[root@kube-master01 ceph]#

To use the Toolbox, run ceph commands from the rook-ceph-tools Pod. A few commands are run here.

# Check the state of the Ceph cluster (ceph status command)
[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph status
  cluster:
    id:     a6d2d8ec-3050-4dac-a9b6-f3521e476e3e
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum a (age 2m)
    mgr: a(active, since 67s)
    osd: 2 osds: 2 up (since 2m), 2 in (since 32h)

  data:
    pools:   1 pools, 8 pgs
    objects: 8 objects, 35 B
    usage:   26 GiB used, 49 GiB / 75 GiB avail
    pgs:     8 active+clean

[root@kube-master01 ceph]#

# Check the cluster health (ceph health command)
[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph health
HEALTH_OK
[root@kube-master01 ceph]#

# Check Ceph data usage (ceph df command)
[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph df
RAW STORAGE:
    CLASS     SIZE       AVAIL      USED       RAW USED     %RAW USED
    hdd       75 GiB     49 GiB     26 GiB       26 GiB         34.08
    TOTAL     75 GiB     49 GiB     26 GiB       26 GiB         34.08

POOLS:
    POOL            ID     STORED     OBJECTS     USED     %USED     MAX AVAIL
    replicapool      1       35 B           8     35 B         0        19 GiB
[root@kube-master01 ceph]#

# Check the state of the Ceph Monitor (ceph mon stat command)
[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph mon stat
e1: 1 mons at {a=[v2:10.104.28.68:3300/0,v1:10.104.28.68:6789/0]}, election epoch 19, leader 0 a, quorum 0 a
[root@kube-master01 ceph]#

# Check the state of the OSDs (ceph osd stat command)
[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd stat
2 osds: 2 up (since 52m), 2 in (since 32h); epoch: e86
[root@kube-master01 ceph]#

# Check the detailed state of the OSDs (ceph osd status command)
[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd status
+----+---------------+-------+-------+--------+---------+--------+---------+-----------+
| id |      host     |  used | avail | wr ops | wr data | rd ops | rd data |   state   |
+----+---------------+-------+-------+--------+---------+--------+---------+-----------+
| 0  | kube-worker02 | 16.2G | 21.1G |    0   |     0   |    0   |     0   | exists,up |
| 1  | kube-worker01 | 9435M | 28.2G |    0   |     0   |    0   |     0   | exists,up |
+----+---------------+-------+-------+--------+---------+--------+---------+-----------+
[root@kube-master01 ceph]#

# Display the OSD map as a tree (ceph osd tree command)
[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd tree
ID CLASS WEIGHT  TYPE NAME              STATUS REWEIGHT PRI-AFF
-1       0.07318 root default
-3       0.03659     host kube-worker01
 1   hdd 0.03659         osd.1              up  1.00000 1.00000
-2       0.03659     host kube-worker02
 0   hdd 0.03659         osd.0              up  1.00000 1.00000
[root@kube-master01 ceph]#
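The commands above pass the Toolbox Pod name literally, and that name changes whenever the Pod is recreated. A minimal sketch of a wrapper that looks the Pod up by its `app=rook-ceph-tools` label (the label used in the `kubectl get pod -l` command earlier) might look like the following. The function names `toolbox_pod`, `toolbox_exec`, and `ceph_healthy` are hypothetical, and `-it` is dropped on the assumption that the commands run non-interactively.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration; not part of Rook or kubectl.

# toolbox_pod: print the name of the first Pod labelled app=rook-ceph-tools.
toolbox_pod() {
  kubectl -n rook-ceph get pod -l app=rook-ceph-tools \
    -o jsonpath='{.items[0].metadata.name}'
}

# toolbox_exec: run a command (for example `ceph status`) inside the Toolbox Pod.
toolbox_exec() {
  local pod
  pod=$(toolbox_pod) || return 1
  [ -n "$pod" ] || { echo "toolbox Pod not found" >&2; return 1; }
  kubectl -n rook-ceph exec "$pod" -- "$@"
}

# ceph_healthy: succeed only when `ceph health` reports HEALTH_OK.
ceph_healthy() {
  local health
  health=$(toolbox_exec ceph health) || return 1
  case "$health" in
    HEALTH_OK*) return 0 ;;
    *) echo "cluster health: $health" >&2; return 1 ;;
  esac
}
```

With these defined, `toolbox_exec ceph osd tree` runs the same command as above without knowing the Pod suffix, and `ceph_healthy || echo "needs attention"` can gate later steps of a script.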

toolbox.yaml is included here as well.

toolbox.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: rook-ceph-tools
  namespace: rook-ceph
  labels:
    app: rook-ceph-tools
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rook-ceph-tools
  template:
    metadata:
      labels:
        app: rook-ceph-tools
    spec:
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: rook-ceph-tools
        image: rook/ceph:master
        command: ["/tini"]
        args: ["-g", "--", "/usr/local/bin/toolbox.sh"]
        imagePullPolicy: IfNotPresent
        env:
          - name: ROOK_ADMIN_SECRET
            valueFrom:
              secretKeyRef:
                name: rook-ceph-mon
                key: admin-secret
        securityContext:
          privileged: true
        volumeMounts:
          - mountPath: /dev
            name: dev
          - mountPath: /sys/bus
            name: sysbus
          - mountPath: /lib/modules
            name: libmodules
          - name: mon-endpoint-volume
            mountPath: /etc/rook
      # if hostNetwork: false, the "rbd map" command hangs, see https://github.com/rook/rook/issues/2021
      hostNetwork: true
      volumes:
        - name: dev
          hostPath:
            path: /dev
        - name: sysbus
          hostPath:
            path: /sys/bus
        - name: libmodules
          hostPath:
            path: /lib/modules
        - name: mon-endpoint-volume
          configMap:
            name: rook-ceph-mon-endpoints
            items:
            - key: data
              path: mon-endpoints

Deploying Storage

At this point the Rook Operator is ready to use. Next we prepare Ceph itself. Ceph provides three types of storage (file, block, and object), and the procedure for using each one through Rook differs by type. Here I chose block storage and followed the steps below.

First we deploy storageclass-test.yaml. In addition to a StorageClass, this YAML file also defines a CephBlockPool.

A StorageClass is the Kubernetes resource used to implement dynamic provisioning of volumes; here its provisioner is set to rook-ceph.rbd.csi.ceph.com.
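To make dynamic provisioning a little more concrete, here is a minimal sketch of a PersistentVolumeClaim that requests a volume from the rook-ceph-block StorageClass. The claim name demo-pvc and the 1Gi size are hypothetical values chosen for illustration; only the StorageClass name comes from this article.

```shell
#!/usr/bin/env bash
# Sketch: a PVC that asks the rook-ceph-block StorageClass for a volume.
# "demo-pvc" and the 1Gi size are hypothetical values for illustration.
set -eu

# Emit the PVC manifest on stdout.
pvc_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc
spec:
  storageClassName: rook-ceph-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
}

# Print the manifest; on a real cluster it could be piped to: kubectl apply -f -
pvc_manifest
```

When such a claim is created, the provisioner named in the StorageClass is expected to create a matching volume on demand, so no PersistentVolume has to be prepared by hand.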

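A CephBlockPool is a storage pool for block storage in Ceph. Here failureDomain (the unit across which data is distributed) is host, and replicated.size is set to 1. replicated.size is the number of copies of the data that the pool keeps, and with failureDomain: host each copy is placed on a different host. As shipped, the pool keeps only a single copy; since this environment has two Kubernetes worker nodes, each running one OSD, I changed size to 2 to match before deploying.

Reference documentation: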

Rook Docs - Ceph Block Pool CRD (ver 0.9)
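Raising replicated.size trades capacity for redundancy, because every byte stored in the pool is written once per replica. The following is a back-of-the-envelope sketch of that trade-off using the 75 GiB of raw capacity that ceph status reports in this environment. The helper name usable_gib is hypothetical, and the calculation ignores Ceph's own overhead and safety margins, so treat the result as a rough upper bound rather than the value ceph df will show.

```shell
#!/usr/bin/env bash
# Sketch: rough upper bound on usable pool capacity for a replicated pool.
# usable_gib is a hypothetical helper; it ignores Ceph overhead and margins.
set -eu

# usable_gib RAW_GIB REPLICA_SIZE
usable_gib() {
  awk -v raw="$1" -v size="$2" 'BEGIN { printf "%.1f\n", raw / size }'
}

usable_gib 75 1   # a single copy of the data
usable_gib 75 2   # two copies, one per host
```

With two copies, roughly half of the raw capacity remains available for application data.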

# Change size to 2
[root@kube-master01 ceph]# sed -e 's/size: 1/size: 2/' csi/rbd/storageclass-test.yaml | kubectl apply -f -
cephblockpool.ceph.rook.io/replicapool created
storageclass.storage.k8s.io/rook-ceph-block created
[root@kube-master01 ceph]#
[root@kube-master01 ceph]# kubectl get storageclass
NAME              PROVISIONER                  AGE
rook-ceph-block   rook-ceph.rbd.csi.ceph.com   15s
[root@kube-master01 ceph]# kubectl get -n rook-ceph cephblockpools.ceph.rook.io replicapool
NAME          AGE
replicapool   36h
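Because the sed expression rewrites the manifest on the fly, it can be worth checking what it produces before piping the result into kubectl apply. The sketch below runs the same kind of substitution against an inline excerpt of the CephBlockPool spec. The helper name set_replica_size is hypothetical, and the pattern is slightly generalized to match any numeric size rather than only 1.

```shell
#!/usr/bin/env bash
# Sketch: preview the replica-size substitution on a manifest excerpt.
# set_replica_size is a hypothetical helper that reads YAML on stdin.
set -eu

# Replace the numeric value of every "size:" key with the given number.
set_replica_size() {
  sed -e "s/size: [0-9][0-9]*/size: $1/"
}

sample='spec:
  failureDomain: host
  replicated:
    size: 1'

# Show the rewritten excerpt; only the size line should differ.
printf '%s\n' "$sample" | set_replica_size 2
```

On the real file, the same helper could sit between cat csi/rbd/storageclass-test.yaml and kubectl apply -f -.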

Let's check the state of the Ceph cluster after the deployment.

# ceph status

[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph status

cluster:

id: a6d2d8ec-3050-4dac-a9b6-f3521e476e3e

health: HEALTH_OK

services:

mon: 1 daemons, quorum a (age 17m)

mgr: a(active, since 15m)

osd: 2 osds: 2 up (since 17m), 2 in (since 32h)

data:

pools: 1 pools, 8 pgs

objects: 8 objects, 35 B

usage: 26 GiB used, 49 GiB / 75 GiB avail

pgs: 8 active+clean

[root@kube-master01 ceph]#

# ceph health

[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph health

HEALTH_OK

[root@kube-master01 ceph]#

# ceph df

[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 75 GiB 49 GiB 26 GiB 26 GiB 34.08

TOTAL 75 GiB 49 GiB 26 GiB 26 GiB 34.08

POOLS:

POOL ID STORED OBJECTS USED %USED MAX AVAIL

replicapool 1 35 B 8 35 B 0 19 GiB

[root@kube-master01 ceph]#

# ceph mon stat

[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph mon stat

e1: 1 mons at {a=[v2:10.104.28.68:3300/0,v1:10.104.28.68:6789/0]}, election epoch 19, leader 0 a, quorum 0 a

[root@kube-master01 ceph]#

# ceph osd stat

[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd stat

2 osds: 2 up (since 16m), 2 in (since 32h); epoch: e86

[root@kube-master01 ceph]#

# ceph osd status

[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd status

+----+---------------+-------+-------+--------+---------+--------+---------+-----------+

| id | host | used | avail | wr ops | wr data | rd ops | rd data | state |

+----+---------------+-------+-------+--------+---------+--------+---------+-----------+

| 0 | kube-worker02 | 16.2G | 21.1G | 0 | 0 | 0 | 0 | exists,up |

| 1 | kube-worker01 | 9455M | 28.2G | 0 | 0 | 0 | 0 | exists,up |

+----+---------------+-------+-------+--------+---------+--------+---------+-----------+

[root@kube-master01 ceph]#

# ceph osd tree

[root@kube-master01 ceph]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.07318 root default

-3 0.03659 host kube-worker01

1 hdd 0.03659 osd.1 up 1.00000 1.00000

-2 0.03659 host kube-worker02

0 hdd 0.03659 osd.0 up 1.00000 1.00000

[root@kube-master01 ceph]#

For reference, here is storageclass-test.yaml as well.

storageclass-test.yaml

apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: host
  replicated:
    size: 1
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  # clusterID is the namespace where the rook cluster is running
  # If you change this namespace, also change the namespace below where the secret namespaces are defined
  clusterID: rook-ceph

  # Ceph pool into which the RBD image shall be created
  pool: replicapool

  # RBD image format. Defaults to "2".
  imageFormat: "2"

  # RBD image features. Available for imageFormat: "2". CSI RBD currently supports only `layering` feature.
  imageFeatures: layering

  # The secrets contain Ceph admin credentials. These are generated automatically by the operator
  # in the same namespace as the cluster.
  csi.storage.k8s.io/provisioner-secret-name: rook-ceph-csi
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-ceph-csi
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph

  # Specify the filesystem type of the volume. If not specified, csi-provisioner
  # will set default as `ext4`.
  csi.storage.k8s.io/fstype: ext4

  # uncomment the following to use rbd-nbd as mounter on supported nodes
  #mounter: rbd-nbd
reclaimPolicy: Delete

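Deploying a Test Application

Now that the Rook-Ceph (block storage) environment is ready, let's use the storage from an application. The official documentation walks through deploying WordPress as an example, so I tried that.

Note that the test YAML files request 20Gi, but I changed this to 1Gi so that the deployment completes quickly.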

# Deploy MySQL
[root@kube-master01 kubernetes]# sed -e 's/storage: 20Gi/storage: 1Gi/' mysql.yaml | kubectl apply -f -
service/wordpress-mysql created
persistentvolumeclaim/mysql-pv-claim created
deployment.apps/wordpress-mysql created
[root@kube-master01 kubernetes]#
# Check after deployment
[root@kube-master01 kubernetes]# kubectl get svc
NAME              TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
kubernetes        ClusterIP   10.96.0.1    <none>        443/TCP    76d
wordpress-mysql   ClusterIP   None         <none>        3306/TCP   10s
[root@kube-master01 kubernetes]# kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
mysql-pv-claim   Bound    pvc-9bf0a057-aed9-4404-8cc5-4cd90e599a4f   1Gi        RWO            rook-ceph-block   15s
[root@kube-master01 kubernetes]# kubectl get pods
NAME                               READY   STATUS    RESTARTS   AGE
wordpress-mysql-6cc97b86fc-wxsls   1/1     Running   0          19s
[root@kube-master01 kubernetes]#
# Deploy WordPress
[root@kube-master01 kubernetes]# sed -e 's/storage: 20Gi/storage: 1Gi/' wordpress.yaml | kubectl apply -f -
service/wordpress created
persistentvolumeclaim/wp-pv-claim created
deployment.apps/wordpress created
[root@kube-master01 kubernetes]#
# Check after deployment
[root@kube-master01 kubernetes]# kubectl get svc
NAME              TYPE           CLUSTER-IP       EXTERNAL-IP     PORT(S)        AGE
kubernetes        ClusterIP      10.96.0.1        <none>          443/TCP        76d
wordpress         LoadBalancer   10.103.145.117   192.168.1.240   80:32538/TCP   6s
wordpress-mysql   ClusterIP      None             <none>          3306/TCP       61s
[root@kube-master01 kubernetes]# kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
mysql-pv-claim   Bound    pvc-9bf0a057-aed9-4404-8cc5-4cd90e599a4f   1Gi        RWO            rook-ceph-block   70s
wp-pv-claim      Bound    pvc-57b514d7-8cde-4267-ae0a-292f3d0dc204   1Gi        RWO            rook-ceph-block   15s
[root@kube-master01 kubernetes]# kubectl get pods
NAME                               READY   STATUS    RESTARTS   AGE
wordpress-8968cd5b6-9t7rs          1/1     Running   0          24s
wordpress-mysql-6cc97b86fc-wxsls   1/1     Running   0          79s
[root@kube-master01 kubernetes]#
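In the output above, the key thing to confirm is that both claims reach the Bound status, which indicates that volumes were provisioned through the rook-ceph-block StorageClass. As a small sketch, that check could be scripted by filtering the kubectl get pvc table. The helper name unbound_pvcs is hypothetical, and the sample rows are copied from this article's output.

```shell
#!/usr/bin/env bash
# Sketch: list PVCs that are not yet Bound, given `kubectl get pvc` output.
# unbound_pvcs is a hypothetical helper that reads the table on stdin.
set -eu

# Skip the header row and print the NAME of every row whose STATUS is not Bound.
unbound_pvcs() {
  awk 'NR > 1 && $2 != "Bound" { print $1 }'
}

sample='NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
mysql-pv-claim Bound pvc-9bf0a057-aed9-4404-8cc5-4cd90e599a4f 1Gi RWO rook-ceph-block 70s
wp-pv-claim Bound pvc-57b514d7-8cde-4267-ae0a-292f3d0dc204 1Gi RWO rook-ceph-block 15s'

pending="$(printf '%s\n' "$sample" | unbound_pvcs)"
if [ -z "$pending" ]; then
  echo "all PVCs are Bound"
else
  printf 'not bound: %s\n' "$pending"
fi

# On a real cluster: kubectl get pvc | unbound_pvcs
```

A claim that stays in Pending for a long time would be a hint to look at the provisioner or the Ceph cluster health.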

The application was deployed successfully.

Finally, let's check the state of the Ceph cluster after the deployment.

# ceph status

[root@kube-master01 kubernetes]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph status

cluster:

id: a6d2d8ec-3050-4dac-a9b6-f3521e476e3e

health: HEALTH_OK

services:

mon: 1 daemons, quorum a (age 26m)

mgr: a(active, since 4m)

osd: 2 osds: 2 up (since 26m), 2 in (since 32h)

data:

pools: 1 pools, 8 pgs

objects: 87 objects, 247 MiB

usage: 26 GiB used, 49 GiB / 75 GiB avail

pgs: 8 active+clean

[root@kube-master01 kubernetes]#

# ceph health

[root@kube-master01 kubernetes]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph health

HEALTH_OK

[root@kube-master01 kubernetes]#

# ceph df

[root@kube-master01 kubernetes]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 75 GiB 49 GiB 26 GiB 26 GiB 34.72

TOTAL 75 GiB 49 GiB 26 GiB 26 GiB 34.72

POOLS:

POOL ID STORED OBJECTS USED %USED MAX AVAIL

replicapool 1 247 MiB 87 247 MiB 0.63 19 GiB

[root@kube-master01 kubernetes]#

# ceph mon stat

[root@kube-master01 kubernetes]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph mon stat

e1: 1 mons at {a=[v2:10.104.28.68:3300/0,v1:10.104.28.68:6789/0]}, election epoch 19, leader 0 a, quorum 0 a

[root@kube-master01 kubernetes]#

# ceph osd stat

[root@kube-master01 kubernetes]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd stat

2 osds: 2 up (since 26m), 2 in (since 32h); epoch: e86

[root@kube-master01 kubernetes]#

# ceph osd status

[root@kube-master01 kubernetes]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd status

+----+---------------+-------+-------+--------+---------+--------+---------+-----------+

| id | host | used | avail | wr ops | wr data | rd ops | rd data | state |

+----+---------------+-------+-------+--------+---------+--------+---------+-----------+

| 0 | kube-worker02 | 16.5G | 20.9G | 0 | 0 | 0 | 0 | exists,up |

| 1 | kube-worker01 | 9693M | 27.9G | 0 | 0 | 0 | 0 | exists,up |

+----+---------------+-------+-------+--------+---------+--------+---------+-----------+

[root@kube-master01 kubernetes]#

# ceph osd tree

[root@kube-master01 kubernetes]# kubectl exec -it -n rook-ceph rook-ceph-tools-7cf4cc7568-zq5gk -- ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.07318 root default

-3 0.03659 host kube-worker01

1 hdd 0.03659 osd.1 up 1.00000 1.00000

-2 0.03659 host kube-worker02

0 hdd 0.03659 osd.0 up 1.00000 1.00000

[root@kube-master01 kubernetes]#
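The health checks above were all run by hand. As a minimal sketch, the ceph health result could also be turned into an exit code so that a script can react to it. The helper name is_healthy is hypothetical, and it only looks at the first word of the output, which is HEALTH_OK in every run shown in this article.

```shell
#!/usr/bin/env bash
# Sketch: turn `ceph health` output into an exit code.
# is_healthy is a hypothetical helper that reads the output on stdin.
set -eu

# Succeed only when the first word on stdin is HEALTH_OK.
is_healthy() {
  local status="" rest=""
  read -r status rest || true
  [ "$status" = "HEALTH_OK" ]
}

if printf 'HEALTH_OK\n' | is_healthy; then
  echo "cluster is healthy"
else
  echo "cluster needs attention" >&2
fi

# On a real cluster (replace <toolbox-pod> with the actual Pod name):
#   kubectl -n rook-ceph exec <toolbox-pod> -- ceph health | is_healthy
```

Any other status, or empty output, is treated as unhealthy, which errs on the side of caution.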

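Summary

In this article I gave a brief introduction to Rook, a tool that implements Cloud Native Storage. Rook acts purely as an Operator, so without an understanding of the storage running underneath it, you cannot use it properly. I used Ceph this time, but partly because my understanding of Ceph is still limited, I could not explore it in much depth. Even so, watching the Ceph cluster come back up automatically after, for example, restarting VirtualBox during the work made it feel like handling any other Kubernetes resource, and I could appreciate the benefit of treating storage resources as Kubernetes resources.

To understand how Rook behaves, you also need to understand what a Kubernetes Operator is. I had the same thought when I tried KubeVirt earlier: as Operators have spread, they are increasingly used as shared resources, and I feel that knowledge of Operators is now required not only of developers but of users as well.

References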

CNCF - Introduction to Cloud Native Storage

StorageOS - StorageOS Vision for Cloud Native Storage for Today’s Modern IT

赤帽エンジニアブログ - ストレージオーケストレーター Rook : 第1話 Rook大地に立つ!!

赤帽エンジニアブログ - ストレージオーケストレーター Rook : 第4話 追撃! トリプル・ポッド